In the complex architecture of the modern internet, certain technologies form the bedrock, ensuring the seamless delivery of websites and applications. NGINX (pronounced "engine-x") stands prominently among these foundational components. If you've ever wondered how the world's busiest websites handle massive traffic loads with apparent ease, chances are high that NGINX is working diligently behind the scenes. It's an incredibly versatile open-source powerhouse, widely deployed as a high-performance web server, an intelligent reverse proxy, a robust load balancer, an efficient HTTP cache, and even a secure API gateway – often fulfilling several of these critical roles simultaneously.

Born from the imperative to overcome the limitations of traditional web servers facing rapidly escalating internet traffic, NGINX was conceived by Russian software engineer Igor Sysoev in 2002 and publicly released in 2004. Its primary objective was to conquer the notorious "C10k problem" – the challenge of efficiently managing ten thousand concurrent client connections on standard hardware. NGINX's revolutionary, event-driven architecture achieved this and more, fueling its rapid adoption and cementing its position as a dominant force in web infrastructure.

This definitive guide serves as your ultimate resource for understanding NGINX configuration, performance, and use cases. Whether you're a seasoned developer crafting applications, a system administrator managing complex infrastructure, a DevOps engineer optimizing deployment pipelines, or simply curious about the technologies that underpin our digital world, you'll find comprehensive insights within.

Table of Contents

- What is NGINX? Unpacking the Fundamentals

- Why NGINX Dominates: Exploring Key Advantages and Benefits

- Under the Hood: NGINX Architecture Explained

- Core Functionalities and Use Cases: What is NGINX Used For?

- NGINX vs. Apache: A Head-to-Head Performance and Feature Comparison

- Conclusion: The Enduring Power and Relevance of the NGINX Engine

- Frequently Asked Questions (FAQ) About NGINX

- Further NGINX Resources and Learning

What is NGINX? Unpacking the Fundamentals

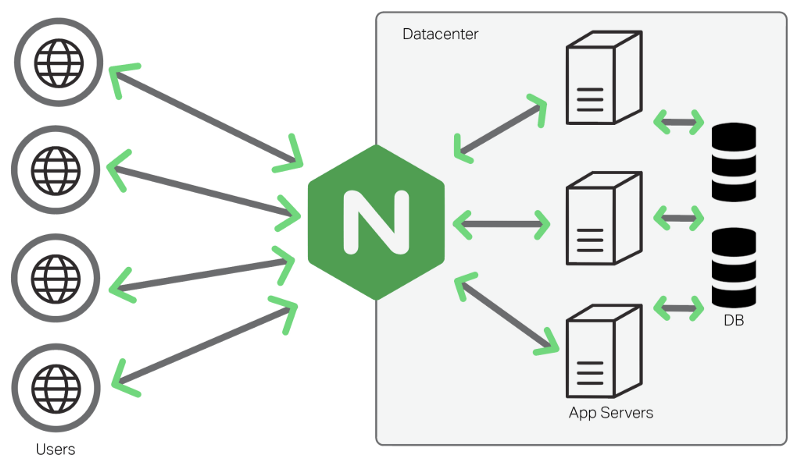

At its heart, NGINX is high-performance, open-source software initially conceived as an HTTP web server. However, its capabilities have dramatically expanded over two decades, transforming it into a multi-purpose tool indispensable for modern web architectures. Its architecture proved exceptionally well-suited for handling network traffic efficiently, leading to its adoption for various roles beyond simple web serving. Its primary functions include:

- Web Server: Serving static content (HTML, CSS, JavaScript, images, video files) directly to clients with exceptional speed and efficiency.

- Reverse Proxy: Acting as a crucial intermediary that forwards client requests to one or more backend servers, significantly enhancing security, performance, load distribution, and overall infrastructure flexibility.

- Load Balancer: Distributing incoming network traffic intelligently across multiple backend servers to improve application availability, reliability, and capacity.

- HTTP Cache: Storing copies of frequently requested web content closer to users (at the edge), dramatically accelerating content delivery and reducing load on backend systems.

- API Gateway: Providing a single, managed, and secure entry point for backend APIs, often handling vital tasks like authentication, rate limiting, request routing, and monitoring.

- Mail Proxy: Capable of proxying email protocols such as IMAP, POP3, and SMTP.

- Generic TCP/UDP Proxy: Extending its proxying and load balancing capabilities to general network traffic beyond standard web protocols.
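The generic TCP/UDP role is configured in a separate top-level `stream` context, not inside `http`. Below is a minimal sketch. It assumes NGINX was built with the stream module (`--with-stream`). Where the module is packaged as a dynamic module, it must also be loaded with `load_module`. The backend addresses and the DNS and MySQL use cases are hypothetical.

```nginx
# Top level of nginx.conf, alongside (not inside) the http { } block
stream {
    # Hypothetical pool of DNS resolvers
    upstream dns_servers {
        server 10.0.0.10:53;
        server 10.0.0.11:53;
    }

    # UDP: forward DNS queries round-robin across the pool
    server {
        listen 53 udp;
        proxy_pass dns_servers;
    }

    # TCP: expose a hypothetical internal MySQL server on port 3306
    server {
        listen 3306;
        proxy_pass 10.0.0.20:3306;
        proxy_connect_timeout 5s;
    }
}
```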

NGINX was meticulously engineered by Igor Sysoev specifically to address the C10k problem, prioritizing high concurrency and extreme resource efficiency from its inception. This foundational design philosophy remains a core differentiator and the primary driver of its performance advantages.

It's vital to recognize the two main distributions:

- NGINX Open Source: The freely available, widely adopted version distributed under a permissive BSD-style license. It provides a robust set of core features suitable for a vast range of deployments.

- NGINX Plus: The commercial offering from F5 (which acquired Nginx, Inc. in 2019). It builds upon the open-source foundation, adding enterprise-grade features such as advanced load balancing algorithms, active health checks for upstream servers, persistent session ("sticky") support, a real-time monitoring dashboard, built-in JWT validation, and dedicated commercial support contracts. A Web Application Firewall (WAF), NGINX App Protect, is available as an add-on module.

Why NGINX Dominates: Exploring Key Advantages and Benefits

NGINX's rise to become one of the most deployed web servers and proxies worldwide is no accident. Its immense popularity is firmly rooted in tangible technical advantages that directly address the rigorous demands of modern web applications and infrastructure:

1. Superior Performance and Scalability (The C10k Solution Realized)

This is NGINX's hallmark achievement. Traditional web servers often employed process-per-connection or thread-per-connection models. These architectures became notoriously inefficient under heavy concurrent load. Memory consumption was high, because each process needs its own address space and each thread its own stack. Frequent context switching also added significant CPU overhead. NGINX revolutionized this with its event-driven, asynchronous, non-blocking architecture. In this design, a small, fixed number of single-threaded worker processes handle thousands, even tens of thousands, of simultaneous connections efficiently on standard hardware. It manages high concurrency without the crippling resource drain of older models, which makes it ideal for scaling web services.

2. Remarkable Resource Efficiency (Low Memory Footprint)

Performance isn't solely about speed; it's also about optimizing resource utilization. NGINX boasts an exceptionally low memory footprint, even when managing a vast number of connections. Official benchmarks and documentation often highlight its ability to handle thousands of inactive HTTP keep-alive connections using minimal memory (e.g., around 2.5MB for 10,000 inactive connections). This efficiency translates directly into lower hardware costs, higher user density per server, and improved operational margins.
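Take the quoted figure at face value and read 2.5 MB as 2.5 × 10^6 bytes. The per-connection cost then works out to roughly:

$$\frac{2.5 \times 10^{6}\ \text{bytes}}{10\,000\ \text{connections}} = 250\ \text{bytes per idle connection}$$

A few hundred bytes per idle keep-alive connection is small compared with the dedicated stack that a thread-per-connection design must reserve for every client.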

3. Exceptional High Concurrency Handling

Directly stemming from its architecture, NGINX excels at managing an enormous number of simultaneous client connections without significant performance degradation. This makes it the default choice for high-traffic websites, busy APIs, real-time applications, and any service anticipating large volumes of concurrent users.

"NGINX's event-driven, asynchronous architecture is the key to its exceptional performance under heavy load, making it the ideal choice for high-traffic websites."

4. Unmatched Versatility (The "Swiss Army Knife")

NGINX is frequently lauded as the "Swiss Army knife" for web traffic management. Its inherent ability to function seamlessly as a web server, reverse proxy, load balancer, and cache—often all within a single instance—allows organizations to simplify their technology stack, reducing operational complexity, licensing costs, and potential points of failure.

5. Rich, Robust, and Modern Feature Set

NGINX supports a comprehensive array of modern web protocols and essential features. This includes native support for HTTP/2, experimental HTTP/3 (QUIC) capabilities, WebSocket proxying, gRPC proxying, robust TLS/SSL encryption with Server Name Indication (SNI) and session resumption, powerful URL rewriting capabilities (`rewrite` directive), flexible virtual hosting via `server` blocks, granular access control mechanisms, and sophisticated caching controls. Its configuration language, while distinct, offers immense power and flexibility once mastered.
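Several of these features can meet in a single `server` block. The sketch below is illustrative only, with these assumptions:

- The hostname, certificate paths, redirect paths, and WebSocket backend address are hypothetical.
- The standalone `http2` directive requires NGINX 1.25.1 or later.
- The QUIC listener requires a build that includes the HTTP/3 module (`--with-http_v3_module`).

```nginx
server {
    listen 443 ssl;                 # TLS over TCP: HTTP/1.1 and HTTP/2
    listen 443 quic reuseport;      # QUIC over UDP: HTTP/3 (experimental)
    http2 on;                       # before 1.25.1, use "listen 443 ssl http2;" instead

    server_name example.com;        # SNI lets several such blocks share one IP address

    ssl_certificate     /etc/nginx/tls/example.com.crt;
    ssl_certificate_key /etc/nginx/tls/example.com.key;
    ssl_protocols       TLSv1.2 TLSv1.3;    # HTTP/3 requires TLSv1.3
    ssl_session_cache   shared:TLS:10m;     # shared cache enables session resumption
    ssl_session_timeout 1h;

    # Tell clients connected over TCP that HTTP/3 is available on the same port
    add_header Alt-Svc 'h3=":443"; ma=86400';

    # URL rewriting: permanently redirect an old path layout to a new one
    rewrite ^/old-blog/(.*)$ /blog/$1 permanent;

    # WebSocket proxying: pass the Upgrade handshake through to the backend
    location /ws/ {
        proxy_pass http://127.0.0.1:9000;
        proxy_http_version 1.1;
        proxy_set_header Upgrade $http_upgrade;
        proxy_set_header Connection "upgrade";
    }
}
```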

6. Active Development, Wide Adoption & Strong Ecosystem

NGINX benefits tremendously from both a vibrant open-source community and the substantial commercial backing of F5. This dual support ensures continuous development, regular security patches, feature enhancements, and timely updates. Its widespread adoption translates into:

- Extensive Documentation: Official documentation is comprehensive and detailed.

- Vast Knowledge Base: Countless high-quality tutorials, blog posts, forum discussions, and Stack Overflow solutions cover nearly every conceivable configuration scenario or issue.

- Ecosystem Integration: NGINX is a standard component in numerous deployment stacks, boasting popular official Docker images and multiple widely-used Kubernetes Ingress Controller implementations.

7. Proven Robustness and Stability

In demanding production environments, NGINX is renowned for its rock-solid reliability. It's known for running stably for extended periods without requiring restarts or interventions, making it a dependable choice for mission-critical services.

Under the Hood: NGINX Architecture Explained

NGINX's groundbreaking performance isn't magic; it's the direct consequence of its innovative architectural design. Understanding this architecture is fundamental to appreciating its efficiency and capabilities.

The Event-Driven, Asynchronous, Non-Blocking Model

This is the cornerstone of NGINX's performance advantage over traditional models.

- Contrast with Blocking Models: Imagine a restaurant waiter who takes one customer's order, walks to the kitchen, and waits there idly until the food is ready before serving it and only then taking the next order. This mirrors traditional blocking, thread-per-connection servers. If many customers arrive simultaneously, you need many waiters (threads/processes), consuming significant resources (memory, CPU time for context switching) and spending much of their time simply waiting.

- NGINX's Efficient Approach: The NGINX worker process operates like a highly efficient multiplexing waiter. It takes an order (receives a request), gives it to the kitchen (initiates an I/O operation like reading a file from disk or sending a request to a backend server), and immediately moves on to take the next customer's order or handle other pending tasks (like sending data back to another client). It doesn't wait idly. When the kitchen signals that food is ready (an operating system event notification arrives, e.g., data is available on a socket or a disk read completes), the waiter swiftly picks it up and delivers it (the worker process handles the event).

- Technical Implementation: NGINX worker processes use efficient operating system event notification mechanisms, such as `epoll` (on Linux) or `kqueue` (on FreeBSD/macOS). These interfaces let a single worker process monitor thousands of network sockets (connections) simultaneously for various "events". Examples include a new connection attempt, data ready to be read from a socket, or a socket ready to accept data for writing. When an event occurs, the worker handles the corresponding task quickly, in a non-blocking manner. Some operations would otherwise block, such as waiting for a network response from a backend. For these, NGINX initiates the operation and registers interest in its completion event with the OS via the event loop. This frees the worker process to handle other ready connections or events immediately, maximizing resource utilization and throughput. Regular file reads are the main exception: they can still block a worker unless asynchronous file I/O (`aio`) or thread pools are enabled.
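Below is a minimal sketch of offloading blocking file reads to a thread pool, so the event loop keeps servicing other connections. It assumes a build with thread support (`--with-threads`). The pool name `io_pool`, its size, the hostname, and the `/downloads/` location are illustrative assumptions.

```nginx
# Main context: a pool of threads that performs blocking file operations
thread_pool io_pool threads=16 max_queue=65536;

http {
    sendfile on;

    server {
        listen 80;
        server_name files.example.com;     # hypothetical host
        root /srv/files;

        location /downloads/ {
            # Hand blocking file reads to the pool instead of running them
            # inside the worker's event loop
            aio threads=io_pool;
        }
    }
}
```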

Master and Worker Processes: A Stable Foundation

NGINX utilizes a multi-process model that enhances stability, security, and efficiency:

- Master Process: This process typically starts with root privileges. Its primary responsibilities include:

  - Reading and validating the NGINX configuration files (`nginx.conf` and any included files).

  - Binding to the required network ports (e.g., 80 for HTTP, 443 for HTTPS). Privileged access is normally required for ports below 1024.

  - Creating the listening sockets and managing the lifecycle (starting, stopping) of the worker processes.

  - Monitoring the worker processes and automatically restarting any that crash unexpectedly.

  - Handling control signals for administrative tasks, most notably graceful configuration reloads (`nginx -s reload`) and seamless binary upgrades without service downtime.

- Worker Processes: These processes inherit the listening sockets from the master. They typically run as a less privileged user and group (e.g., `www-data` or `nginx`), as specified by the `user` directive in the configuration, which is a crucial security feature. The worker processes perform the actual request handling:

  - Accepting new incoming connections on the shared listening sockets, using the event notification mechanism.

  - Reading client request headers and bodies.

  - Processing requests: serving static files directly, acting as a reverse proxy to upstream servers, applying configured rules (rewrites, access control, etc.).

  - Writing response headers and bodies back to clients.

  - Handling network I/O (and, with `aio` enabled, file I/O) asynchronously via their individual event loops.

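```nginx
# Basic NGINX process configuration (main context of nginx.conf)
user www-data;                 # Unprivileged user the worker processes run as

worker_processes auto;         # One worker per available CPU core

worker_rlimit_nofile 65535;    # Raise the open-file limit for each worker process

events {
    worker_connections 4096;   # Max simultaneous connections per worker (clients and upstreams)
    multi_accept on;           # Accept all pending connections at once, not one per event
    use epoll;                 # Linux only; NGINX picks the best method automatically if omitted
}
```

Seamless Configuration Reloads & Zero-Downtime Upgrades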

A critical feature for maintaining service availability in production environments is NGINX's ability to apply configuration changes and even software upgrades without interrupting client connections:

- Graceful Configuration Reload (`nginx -s reload`): When this signal is sent to the master process, the master first checks the syntax of the new configuration and tries to apply it. If that succeeds, the master does two things:

  - It starts new worker processes with the updated configuration.

  - It sends a "graceful shutdown" signal to the old worker processes.

  If the new configuration cannot be applied, the master keeps running with the old one. The old workers stop accepting new connections immediately, but they finish the requests they are already handling and then exit. The new worker processes handle all new incoming connections. This handover means routine configuration updates do not drop established connections.

- Binary Upgrade: NGINX can also replace its own executable with a newer version. The master process orchestrates a similar graceful handover, so software updates do not require a service outage.

Core Functionalities and Use Cases: What is NGINX Used For?

NGINX's remarkable versatility allows it to fulfill several crucial roles within modern web infrastructure. Its ability to combine these functions efficiently makes it a cornerstone technology. Let's explore the most significant use cases:

1. High-Performance Web Server (Especially for Static Content)

This was NGINX's original purpose, and it continues to excel in this role, particularly when serving static assets.

- Static Files: Efficiently delivering files like HTML pages, CSS stylesheets, JavaScript code, images, fonts, videos, and other assets directly from the server's filesystem with minimal processing overhead.

- Efficiency: Its event-driven architecture, combined with sophisticated use of operating system optimizations like the `sendfile()` system call and Asynchronous I/O (AIO), makes static file delivery incredibly fast and resource-light compared to many other web servers.

- Features: NGINX supports standard web server features like serving default index files (e.g., automatically serving `index.html` when the directory `/` is requested), generating directory listings (`autoindex on;`, use with caution), and handling byte-range requests (essential for resumable downloads and video/audio seeking).
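The efficiency and feature points above map onto a handful of directives. The sketch below uses illustrative values. The `downloads.example.com` host and `/srv/downloads` path are hypothetical.

```nginx
http {
    sendfile    on;    # kernel copies file data straight to the socket
    tcp_nopush  on;    # with sendfile: send headers and file start in full packets

    # Cache open file descriptors and metadata for frequently requested files
    open_file_cache          max=10000 inactive=60s;
    open_file_cache_valid    120s;

    server {
        listen 80;
        server_name downloads.example.com;
        root /srv/downloads;
        index index.html;              # served when a directory such as / is requested

        location / {
            autoindex on;              # list directories that have no index file
            autoindex_exact_size off;  # show sizes rounded to KB/MB/GB instead of bytes
        }
        # Byte-range requests (resumable downloads, media seeking) need no
        # extra configuration for static files.
    }
}
```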

Basic Configuration Example (Serving static files):

```nginx
# Example configuration usually placed in /etc/nginx/conf.d/mysite.conf
# or linked from /etc/nginx/sites-enabled/mysite
server {
    listen 80;  # Listen on port 80 for incoming HTTP connections
    server_name my-static-site.com www.my-static-site.com;  # Domain(s) this block handles

    # Define the document root - the directory where website files are stored
    root /var/www/my-static-site.com/public;

    # Define default files to serve if a directory is requested
    index index.html index.htm;

    # Define how to handle incoming requests based on the URI
    location / {
        # Try serving the request as a file ($uri), then as a directory ($uri/),
        # otherwise return a 404 Not Found error if neither exists.
        try_files $uri $uri/ =404;
    }

    # Example: Custom location block for images with longer browser caching instructions
    location /images/ {
        expires 30d;  # Instruct browsers to cache images aggressively (30 days)
        add_header Cache-Control "public";  # Mark cache as public
        # Access logs can be turned off for static assets to reduce I/O
        access_log off;
    }

    # Optional: Define custom error pages for better user experience
    error_page 404 /404.html;
    location = /404.html {
        # Ensures this location is only accessible internally for error handling
        internal;
        root /var/www/error_pages;  # Specify where error pages reside
    }
}
```

2. The Powerhouse Reverse Proxy

This is arguably NGINX's most prevalent and strategically valuable use case in contemporary web architectures.

What is a Reverse Proxy? Instead of clients (browsers, mobile apps) connecting directly to your backend application servers (which might be running Node.js, Python/Django, PHP-FPM, Java/Tomcat, Ruby/Puma, Go, etc.), they connect to NGINX acting as a reverse proxy. NGINX then intelligently forwards these requests to the appropriate backend server(s) based on configured rules. Crucially, the internal backend infrastructure remains hidden from the external client.

Key Benefits:

- Load Balancing: Distribute incoming traffic across multiple backend application instances for scalability and redundancy.

- Enhanced Security: Acts as a single, hardened gateway, shielding backend servers from direct exposure to internet traffic. It hides the characteristics (OS, server versions) and IP addresses of internal servers, reducing the attack surface.

- SSL/TLS Termination: Handles the computationally expensive tasks of HTTPS encryption and decryption. NGINX communicates with clients over secure HTTPS, while potentially communicating with backend servers over faster, unencrypted HTTP within a trusted private network. This offloads CPU load from application servers.

- Caching: Stores responses received from backend servers locally. Subsequent identical requests can be served directly from the NGINX cache, dramatically improving response times and reducing load on backend systems.

- Compression: Compresses responses before sending them to clients, with gzip or with Brotli via the third-party `ngx_brotli` module. This reduces bandwidth consumption and speeds up page loads, especially on slower connections.

- Serving Mixed Content Efficiently: Serve static assets (images, CSS, JS) directly and rapidly from NGINX itself, while proxying only dynamic requests (requiring application logic) to the appropriate backend servers.

- Centralized Access Control & Logging: Enforce authentication, authorization rules, and aggregate access logs at the edge (NGINX) rather than implementing them individually in each backend service.

- Protocol Translation: Can accept modern protocols like HTTP/2 or HTTP/3 from clients and translate requests to older protocols like HTTP/1.1 when communicating with legacy backend systems.

- Simplified Backend Management: Allows backend servers to be added, removed, upgraded, or undergo maintenance without affecting the public-facing endpoint managed by NGINX.
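Several of these benefits can be combined in one `server` block: TLS termination, compression, and serving static assets locally while proxying dynamic requests. The sketch below rests on stated assumptions:

- The hostname, certificate paths, `/var/www/app` directory, and backend on `127.0.0.1:8000` are hypothetical.
- Brotli is omitted because it needs a third-party module.

```nginx
server {
    listen 443 ssl;                            # HTTPS ends here (TLS termination)
    server_name app.example.com;
    ssl_certificate     /etc/nginx/tls/app.example.com.crt;
    ssl_certificate_key /etc/nginx/tls/app.example.com.key;

    # Compress text responses before they leave NGINX (text/html is always included)
    gzip            on;
    gzip_comp_level 5;
    gzip_min_length 1024;                      # skip tiny responses
    gzip_types      text/css application/javascript application/json image/svg+xml;

    # Static assets come straight from disk: /static/x.css -> /var/www/app/static/x.css
    location /static/ {
        root    /var/www/app;
        expires 7d;
    }

    # Everything else goes to the application over plain HTTP on a private interface
    location / {
        proxy_pass http://127.0.0.1:8000;
        proxy_set_header Host              $host;
        proxy_set_header X-Real-IP         $remote_addr;
        proxy_set_header X-Forwarded-For   $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
    }
}
```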

Basic Configuration Example (Simple reverse proxy):

```nginx
# Define a named group (pool) of backend servers for proxying
upstream my_backend_app {
    # Example: A Node.js application running locally on port 8080
    server 127.0.0.1:8080;

    # Add more backend servers here for basic load balancing (round-robin by default)
    # server app_server_1.internal:8080;
    # server app_server_2.internal:8080;
}

server {
    listen 80;
    server_name my-dynamic-app.com;

    location / {
        # Forward all requests for this location to the upstream group defined above
        proxy_pass http://my_backend_app;

        # Set essential HTTP headers so the backend application receives
        # correct information about the original request
        proxy_set_header Host $host;                                  # Original 'Host' header from the client
        proxy_set_header X-Real-IP $remote_addr;                      # Real client IP address
        proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;  # Appends client IP to any existing list
        proxy_set_header X-Forwarded-Proto $scheme;                   # Original protocol (http or https)

        # Optional: adjust timeouts (each defaults to 60s) for slow backends
        # proxy_connect_timeout 10s;  # Max time to establish a connection (usually cannot exceed 75s)
        # proxy_read_timeout 120s;    # Max gap between two successive reads from the backend
        # proxy_send_timeout 120s;    # Max gap between two successive writes to the backend
    }
}
```

3. Intelligent Load Balancer

Load balancing is a specific, critical function often implemented by NGINX when configured as a reverse proxy. It involves distributing incoming client requests across a defined pool (an upstream block) of backend servers to prevent any single server from becoming overwhelmed, thereby improving overall application performance, availability, and scalability.

Core Benefits: Enhances application availability (if one server fails, others take over), reliability (provides fault tolerance), and scalability (allows adding more backend servers horizontally to handle increased load).

Distribution Algorithms (NGINX Open Source):

- Round Robin (Default): Requests are distributed sequentially to the servers listed in the upstream block. Simple, predictable, and effective for evenly performing backends.

- Least Connections (least_conn): NGINX sends the next incoming request to the server currently processing the fewest active connections. This is generally more effective than Round Robin when request processing times vary significantly between requests or servers.

- IP Hash (ip_hash): The backend server is chosen based on a hash calculation derived from the client's IP address. This ensures that requests from the same client consistently land on the same backend server. This is useful for applications that maintain session state locally on the server, although shared session storage (e.g., Redis, Memcached) is often a more robust solution.

- Generic Hash (hash $variable [consistent]): Allows load balancing based on a hash of a user-defined variable (e.g., $request_uri, $http_user_agent, a specific header value). The optional consistent parameter uses the Ketama consistent hashing algorithm, which minimizes the redistribution of requests to different servers when the list of upstream servers changes (e.g., adding or removing a server).
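For instance, the generic hash method can pin each URL to one backend, which keeps per-server caches warm. Below is a minimal sketch. The upstream name and hostnames are hypothetical.

```nginx
upstream cache_tier {
    # Choose the server from a hash of the request URI; "consistent" enables
    # ketama hashing, so adding or removing a server remaps only a fraction of keys
    hash $request_uri consistent;

    server cache1.internal:8080;
    server cache2.internal:8080;
    server cache3.internal:8080;
}
```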

Health Checks: Ensuring traffic isn't sent to unhealthy backend servers is vital.

- Passive (Built-in in Open Source): NGINX automatically marks a server as temporarily unavailable if connection attempts fail or time out, based on the max_fails and fail_timeout parameters configured for each server directive within the upstream block. Traffic is diverted from the failed server for the fail_timeout duration.

- Active (NGINX Plus Feature): NGINX Plus can be configured to proactively send periodic, out-of-band health check requests (e.g., requesting a specific /health endpoint) to backend servers to determine their availability independently of actual client traffic. This provides faster detection of failures and recovery.
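The sketch below contrasts the two approaches:

- The passive settings and the `zone` directive work in NGINX Open Source.
- The `health_check` line is NGINX Plus only. It would make `nginx -t` fail on an open-source build, so remove it there.
- The hostnames and the `/health` path are hypothetical.

```nginx
upstream app_pool {
    zone app_pool 64k;     # shared-memory zone; required by health_check in NGINX Plus

    # Passive: 3 failures within 30s mark the server unavailable for 30s
    server app1.internal:8080 max_fails=3 fail_timeout=30s;
    server app2.internal:8080 max_fails=3 fail_timeout=30s;
}

server {
    listen 80;

    location / {
        proxy_pass http://app_pool;

        # Passive (open source): on these failures, retry the request on the next server
        proxy_next_upstream error timeout http_502 http_503;
        proxy_next_upstream_tries 2;

        # Active (NGINX Plus only): probe /health every 5s; 3 failures mark the
        # server down, 2 successes bring it back
        health_check interval=5s fails=3 passes=2 uri=/health;
    }
}
```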

Basic Configuration Example (Upstream block for load balancing):

```nginx
http {
    # Define the upstream group containing the backend servers
    upstream my_web_cluster {
        # Uncomment one algorithm directive below to override the default Round Robin
        # least_conn;  # Use Least Connections algorithm
        # ip_hash;     # Use IP Hash for session persistence (remove the "backup"
        #              # server first: ip_hash and hash do not allow it)

        # Define the backend servers
        server backend1.example.com;                               # Server 1, default weight is 1
        server backend2.example.com weight=3;                      # Receives 3x the traffic of server 1
        server backend3.example.com backup;                        # Used only if all primary servers fail
        server backend4.example.com max_fails=3 fail_timeout=30s;  # Custom passive health check parameters

        # Keepalive connections to upstream servers can improve performance
        # keepalive 32;  # Idle keepalive connections cached per worker process; also
        #                # needs proxy_http_version 1.1 and proxy_set_header Connection "";
    }

    server {
        listen 80;
        server_name www.example.com;

        location / {
            proxy_pass http://my_web_cluster;  # Proxy requests to the load-balanced upstream group

            # Always include essential proxy headers
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Optional: Set read timeout for upstream connection
            # proxy_read_timeout 90s;
        }
    }
}
```

4. Efficient Content Caching (HTTP Cache)

NGINX can function as a highly effective, disk-based HTTP cache when operating as a reverse proxy, significantly improving website and application performance.

How it Works: When NGINX receives a request intended for a proxied backend server, it first checks its configured local cache storage (defined by the proxy_cache_path directive).

- Cache HIT: If a valid, non-expired copy of the requested resource (identified by a cache key, typically based on the URL) exists in the cache, NGINX serves the response directly from its cache to the client. This completely bypasses the need to contact the backend server.

- Cache MISS/EXPIRED: If the resource is not found in the cache, or if the cached version has expired (based on HTTP caching headers like Cache-Control: max-age or Expires, or NGINX's own proxy_cache_valid settings), NGINX forwards the request to the appropriate backend server. When the backend responds, NGINX delivers this response to the client and simultaneously stores a copy in its cache for potential future requests.

Key Benefits:

- Drastically Reduced Latency: Serving content directly from NGINX's local disk cache (or potentially memory, depending on OS caching) is significantly faster than fetching it across the network from potentially slower or geographically distant backend application servers or databases.

- Significantly Reduced Backend Load: By handling repetitive requests for the same content directly, NGINX shields application servers, databases, and other backend resources from unnecessary load. This frees up backend resources to handle unique or dynamic requests more efficiently and can lead to substantial infrastructure cost savings.

Basic Configuration Example (Proxy caching):

```nginx
http {
    # Define the cache storage: path, directory levels, keys zone name & memory size,
    # max total disk cache size, inactive timer (remove items not accessed for 60m),
    # use_temp_path=off recommended to avoid copying files between filesystems.
    proxy_cache_path /var/cache/nginx levels=1:2 keys_zone=my_app_cache:100m max_size=10g inactive=60m use_temp_path=off;

    upstream my_backend_app {
        server 127.0.0.1:8080;
    }

    server {
        listen 80;
        server_name www.example.com;

        location / {
            proxy_pass http://my_backend_app;

            # Enable caching using the defined zone 'my_app_cache'
            proxy_cache my_app_cache;

            # Define what components make up the unique cache key (customize if needed)
            proxy_cache_key "$scheme$request_method$host$request_uri";

            # Default validity per response code; Cache-Control/Expires from the backend take precedence
            proxy_cache_valid 200 301 302 10m;  # Cache OK, Moved Permanently, Found for 10 mins
            proxy_cache_valid 404 1m;           # Cache Not Found for 1 minute

            # Improve resilience: serve stale (expired) content if the backend errors or times out.
            # 'updating' serves stale content to other clients while one request refreshes the entry
            # (add "proxy_cache_background_update on;" to refresh it in the background).
            proxy_cache_use_stale error timeout invalid_header updating http_500 http_502 http_503 http_504;

            # Prevent multiple identical requests hitting the backend simultaneously on a cache miss (thundering herd)
            proxy_cache_lock on;

            # Add a response header for easy debugging of cache status (HIT, MISS, EXPIRED, ...)
            add_header X-Proxy-Cache $upstream_cache_status;

            # Always include essential proxy headers
            proxy_set_header Host $host;
            proxy_set_header X-Real-IP $remote_addr;
            proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
            proxy_set_header X-Forwarded-Proto $scheme;

            # Optionally skip the cache for logged-in users: do not read from it
            # and do not store their responses
            # proxy_cache_bypass $cookie_sessionid;
            # proxy_no_cache     $cookie_sessionid;
        }
    }
}
```

5. Secure and Performant API Gateway

With the widespread adoption of microservices architectures, managing external access to numerous backend APIs becomes complex and critical. NGINX and NGINX Plus are frequently employed to build robust, performant API Gateways.

- Single Entry Point: Provides a unified, consistent endpoint for all external API consumers, simplifying discovery and consumption.

- Authentication & Authorization: Can perform essential security checks at the edge. This includes validating API keys, authenticating using HTTP Basic Auth, validating JSON Web Tokens (JWTs) (built-in with auth_jwt in NGINX Plus, or via Lua/third-party modules in Open Source), or using the auth_request directive to delegate authentication/authorization decisions to an external microservice.

- Rate Limiting: Protects backend API services from accidental overload or malicious abuse by limiting the number of requests clients can make within a given time period (requests per second/minute). Configured using limit_req_zone and limit_req. Connection limiting (limit_conn_zone, limit_conn) can also restrict concurrent connections per client IP.

- Request Routing: Intelligently routes incoming API calls to different backend microservices based on criteria like the request path (URI), HTTP method, headers, or query parameters.

- Response Transformation (Basic): While dedicated API Gateway solutions often offer more advanced capabilities, NGINX can perform basic modifications to requests or responses, such as adding/removing headers. More complex transformations often involve Lua scripting.

- Security Enforcement: Applies consistent security policies across all APIs, handles SSL/TLS termination, and can integrate with Web Application Firewalls (like ModSecurity or NGINX App Protect) for deeper threat protection.
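Several of these gateway roles can be combined in one configuration. Below is a minimal sketch for NGINX Open Source that does three things:

- It authenticates each API request through an internal subrequest.
- It rate-limits requests per client IP.
- It routes requests to separate services by path prefix.

It assumes a build that includes `ngx_http_auth_request_module` (`--with-http_auth_request_module`, often included in prebuilt packages). The service addresses, the `/_auth` location, and the `/validate` endpoint are hypothetical.

```nginx
http {
    # Shared 10 MB zone keyed by client IP, averaging 10 requests/second
    limit_req_zone $binary_remote_addr zone=api_limit:10m rate=10r/s;
    limit_req_status 429;          # reply "Too Many Requests" instead of the default 503

    upstream users_service  { server 10.0.1.10:8080; }
    upstream orders_service { server 10.0.1.20:8080; }

    server {
        listen 80;
        server_name api.example.com;

        # Internal subrequest target: a 2xx lets the request through; 401/403 rejects it
        location = /_auth {
            internal;
            proxy_pass http://10.0.1.30:9000/validate;
            proxy_pass_request_body off;           # the auth service only needs headers
            proxy_set_header Content-Length "";
            proxy_set_header X-Original-URI $request_uri;
        }

        # Path-based routing to separate microservices
        location /api/users/ {
            auth_request /_auth;
            limit_req zone=api_limit burst=20 nodelay;
            proxy_pass http://users_service;
        }

        location /api/orders/ {
            auth_request /_auth;
            limit_req zone=api_limit burst=20 nodelay;
            proxy_pass http://orders_service;
        }
    }
}
```

Because `proxy_pass` is given without a URI part in the API locations, the original request path (e.g. `/api/users/42`) is forwarded unchanged to the matching service.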

NGINX vs. Apache: A Head-to-Head Performance and Feature Comparison

For many years, the Apache HTTP Server (often simply called "Apache" or httpd) was the undisputed leader among web servers. While Apache has evolved significantly (notably introducing its own event-driven processing modules like mpm_event), NGINX was designed from the ground up specifically for high concurrency and generally maintains an edge in raw performance and resource efficiency, particularly under heavy concurrent load. Here's a comparative look:

| Feature | NGINX | Apache HTTP Server | Notes & Considerations |
|---|---|---|---|
| Core Architecture | Event-driven, asynchronous, non-blocking (one master process plus a fixed pool of worker processes). | Pluggable Multi-Processing Modules (MPMs): process-based Prefork (the traditional default), hybrid multi-process/multi-threaded Worker, or Event (the default on most modern builds). | NGINX's native event-driven design is typically more resource-efficient per connection. Apache's Event MPM, added later, is far better than Prefork, but it still dedicates a thread to each request while that request is being processed, whereas each NGINX worker multiplexes many connections at once. |
| Performance (Static Content) | Generally excellent, with very low CPU and memory usage per connection. Makes efficient use of OS features such as sendfile. | Good, especially with the Event or Worker MPM; can be very fast. | NGINX typically performs better and uses significantly less memory when serving large numbers of static files concurrently, thanks to its architectural focus on non-blocking I/O. |
| Performance (Dynamic Content) | Acts as a reverse proxy, forwarding requests to external application servers (PHP-FPM, uWSGI, Puma, Node.js, etc.). Overall performance depends heavily on the backend, but the proxying itself is highly efficient. | Can embed interpreters directly (e.g., mod_php with the Prefork MPM) or proxy to external processes as NGINX does (e.g., mod_proxy_fcgi with the Event or Worker MPM). | The NGINX + PHP-FPM combination is a very common, high-performance setup for PHP applications. Apache's embedded mod_php (with Prefork) can simplify initial setup but often consumes much more memory under load and scales concurrency less well, so proxying via FastCGI is generally preferred for performance with Apache too. |
| Memory Usage | Generally lower per connection; scales very well as connections increase. | Can be significantly higher, especially with the Prefork MPM (each process has a larger footprint). The Event and Worker MPMs are much better but can still use more memory than NGINX under similar loads. | NGINX's event-driven model scales better in memory consumption as the number of connections grows. |
| Configuration | Its own block-based, declarative syntax (nginx.conf), generally centralized. Does not support per-directory .htaccess-style files. | Line-based directives grouped in XML-style section blocks such as VirtualHost and Directory (httpd.conf plus included files). Traditionally relies heavily on decentralized .htaccess files for per-directory overrides. | NGINX configuration is often perceived as cleaner and avoids per-request filesystem checks for .htaccess files, but it can have a steeper initial learning curve. Apache's .htaccess offers significant flexibility (especially in shared hosting) but can hurt performance and complicate centralized management. |
| Flexibility/Modules | Highly flexible through core, standard, and third-party modules; supports dynamically loaded modules (load_module, since version 1.9.11). Core strengths are high-performance web serving, proxying, caching, and load balancing. | Extremely flexible, with a massive, mature module ecosystem and long-standing dynamic module loading (LoadModule). The .htaccess system adds runtime configuration flexibility. | Apache has historically led in the sheer breadth of available modules and in the convenience .htaccess offers for certain tasks. NGINX is often considered superior for complex reverse proxy, load balancing, and caching setups. |
| Primary Use Cases | High-traffic/high-concurrency websites, reverse proxying, load balancing, content caching, API gateways, efficient static/media file serving, container and Kubernetes environments (Ingress). | Shared hosting (due to .htaccess), applications requiring specific legacy Apache modules, simpler setups using embedded interpreters like mod_php, and situations that prioritize decentralized configuration. | The two servers overlap substantially and both are capable; these lists reflect common deployment patterns based on each one's strengths. |
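As an illustration of the Configuration and Dynamic Content rows, the following sketch shows how a rule that an Apache site might keep in .htaccess (here, a typical "front controller" rewrite) lives centrally in an NGINX server block that hands PHP requests to PHP-FPM. The domain, document root, and PHP-FPM socket path are assumptions; the socket location in particular varies by distribution and PHP version.

```nginx
server {
    listen 80;
    server_name example.com;               # assumed domain
    root /var/www/example;                 # assumed document root
    index index.php index.html;

    # Centralized equivalent of a common .htaccess rewrite:
    # serve the file or directory if it exists, otherwise route to index.php
    location / {
        try_files $uri $uri/ /index.php?$query_string;
    }

    # Forward PHP scripts to PHP-FPM over FastCGI
    location ~ \.php$ {
        try_files $uri =404;                                   # never pass missing scripts to PHP-FPM
        include fastcgi_params;                                # standard FastCGI variables shipped with NGINX
        fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
        fastcgi_pass unix:/run/php/php-fpm.sock;               # assumed socket path
    }
}
```

Because these rules are read once at startup (or reload), NGINX does not need to look for per-directory override files on each request, which is the performance point made in the table.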

Key Takeaways for Choosing:

- Choose NGINX when: Top priorities include handling high concurrency, maximizing performance under load, optimizing resource (especially memory) efficiency, implementing sophisticated reverse proxy, load balancing, or caching setups, efficiently serving static content, or deploying within containerized environments. You prefer or can adapt to centralized configuration management.

- Choose Apache when: You absolutely require the per-directory configuration flexibility of .htaccess files (very common in traditional shared hosting), need specific Apache-only modules not available for NGINX, or perhaps prefer the perceived simplicity of embedded interpreters like mod_php for smaller sites (while being mindful of the performance trade-offs compared to FastCGI).

- Combine Them (Hybrid Approach): A popular and powerful architectural pattern involves using NGINX as the front-end server. In this setup, NGINX handles all incoming client connections, serves static files directly, terminates SSL/TLS, performs caching, and acts as a load balancer/reverse proxy, forwarding only the necessary dynamic requests to a backend Apache server (which might be running specific applications or modules). This leverages the strengths of both servers: NGINX for high-performance connection handling and static content, Apache for specific application compatibility if needed.
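A minimal sketch of the hybrid pattern might look like the following, assuming Apache has been moved to listen only on 127.0.0.1:8080 and that static assets live under a /static/ path. The upstream name, port, domain, and paths are all placeholders, and the upstream and server blocks belong inside the http context.

```nginx
# Apache assumed to listen locally on port 8080
upstream apache_backend {
    server 127.0.0.1:8080;
}

server {
    listen 443 ssl;                                       # NGINX terminates TLS
    server_name example.com;                              # assumed domain
    ssl_certificate     /etc/nginx/certs/example.crt;     # assumed certificate paths
    ssl_certificate_key /etc/nginx/certs/example.key;

    root /var/www/example;                                # assumed document root

    # Static assets are served directly by NGINX with long client-side caching
    location /static/ {
        expires 30d;
    }

    # Everything else is proxied to Apache, preserving client details
    location / {
        proxy_set_header Host              $host;
        proxy_set_header X-Real-IP         $remote_addr;
        proxy_set_header X-Forwarded-For   $proxy_add_x_forwarded_for;
        proxy_set_header X-Forwarded-Proto $scheme;
        proxy_pass http://apache_backend;
    }
}
```

On the Apache side, a module such as mod_remoteip (with RemoteIPHeader X-Forwarded-For and the NGINX address declared as a trusted proxy) lets Apache log and act on the real client IP rather than 127.0.0.1.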

Conclusion: The Enduring Power and Relevance of the NGINX Engine

From its focused origins tackling the C10k challenge to its current status as a multifaceted linchpin of the modern web stack, NGINX has consistently proven its exceptional power, unparalleled efficiency, and remarkable adaptability. Its core event-driven, asynchronous architecture delivers outstanding performance and scalability under the pressure of high concurrency, setting it apart from many alternatives. Furthermore, its ability to seamlessly transition between critical roles – high-performance web server, intelligent reverse proxy, robust load balancer, efficient HTTP cache, and secure API gateway – provides immense architectural flexibility and operational efficiency.

Whether you are deploying a simple static website, managing complex microservice landscapes through a Kubernetes Ingress Controller, optimizing content delivery, or securing API traffic, NGINX offers the robust feature set and raw processing power required to handle today's most demanding internet workloads. While the web technology landscape continuously evolves, with new tools emerging and community dynamics shifting (as evidenced by recent forks like Angie and freenginx), NGINX remains a dominant, highly relevant, and often indispensable technology. Its widespread adoption, extensive documentation, strong community support, and proven track record in production solidify its ongoing importance.

Mastering NGINX configuration, understanding its architectural advantages, and leveraging its diverse capabilities is no longer just a niche skill; it has become a fundamental competency for professionals involved in building, deploying, scaling, or managing modern web applications and infrastructure. The powerful combination of performance, efficiency, and versatility ensures that NGINX will undoubtedly continue to power a significant portion of the internet for the foreseeable future.

Frequently Asked Questions (FAQ) About NGINX

Q: Why is NGINX so popular? Why does everyone seem to use it?

A: NGINX's immense popularity stems from a powerful combination of factors:

- High Performance: Especially under high concurrent load, its event-driven architecture uses significantly fewer resources (CPU, memory) per connection than traditional models.

- Scalability: Handles tens of thousands of simultaneous connections efficiently on standard hardware.

- Low Memory Footprint: Very resource-efficient, lowering hardware costs.

- Versatility: Excels as a web server (especially static files), reverse proxy, load balancer, HTTP cache, and API gateway, often combining these roles.

- Stability & Reliability: Proven track record in high-traffic production environments.

- Strong Ecosystem: Excellent documentation, active open-source community, robust commercial support (NGINX Plus), and integration with tools like Docker and Kubernetes.

Q: Is NGINX free to use?

A: Yes. The core NGINX Open Source software is free and distributed under the permissive 2-clause BSD license. You can download, use, modify, and distribute it freely. F5 also sells a commercial version, NGINX Plus, on a subscription basis. NGINX Plus includes all open-source features plus enterprise-focused capabilities (additional load balancing methods, active health checks, JWT validation, a live monitoring dashboard, and an optional WAF add-on, NGINX App Protect) and comes with dedicated commercial support.

Q: Can NGINX completely replace Apache? When might I still use Apache?

A: Technically, yes. NGINX can handle all the core functions of a web server that Apache does: serving static files, proxying dynamic requests (e.g., to PHP-FPM), managing SSL/TLS, handling virtual hosts, etc. Many modern, high-traffic websites run exclusively on NGINX. However, you might still choose Apache (or use it behind an NGINX proxy) if:

- You absolutely need the flexibility of per-directory .htaccess configuration files (common in traditional shared hosting environments).

- You rely on specific Apache-only modules that don't have an equivalent for NGINX.

- Your team has deep existing expertise primarily with Apache configuration.

Using NGINX as a front-end reverse proxy to Apache is a common hybrid approach that leverages NGINX's connection handling strengths while retaining Apache for specific backend tasks if necessary.

Q: How can I check if a website is using NGINX or Apache?

A: The simplest way is often to look at the Server HTTP response header. You can use your browser's developer tools (usually under the "Network" tab: select a request and inspect its response headers) or an online tool that checks HTTP headers for a given URL. If the administrator hasn't altered it, the Server header may explicitly say "nginx" or "Apache". However, many administrators limit what this header reveals for security (obscurity) reasons. Note that in NGINX, server_tokens off; only hides the version number, so the header still reads "nginx"; removing or rewriting the header entirely requires a third-party module such as headers-more (or NGINX Plus). Also, Content Delivery Networks (CDNs) like Cloudflare or Akamai often sit in front of the origin server and may report their own server name in the header, masking the underlying technology.
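For reference, a minimal sketch of the two approaches mentioned above is shown below. The server_tokens directive is part of stock NGINX; the commented-out lines assume the third-party headers-more module has been installed, and the module file name may differ depending on how it was packaged.

```nginx
# Main context: only needed if the third-party headers-more module is used
# load_module modules/ngx_http_headers_more_filter_module.so;

events {}

http {
    # Send "Server: nginx" instead of "Server: nginx/<version>",
    # and omit the version from default error pages
    server_tokens off;

    # With headers-more loaded, the header can be removed entirely:
    # more_clear_headers Server;
}
```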

Q: Is NGINX considered safe and secure?

A: The NGINX software itself has a strong security track record and is actively maintained, with vulnerabilities typically patched quickly. However, the actual security of any NGINX deployment depends critically on secure configuration and diligent ongoing maintenance. An out-of-the-box or poorly configured NGINX instance can be insecure. Essential security practices include: keeping NGINX updated, enforcing HTTPS with strong protocols/ciphers, configuring system firewalls correctly, implementing rate limiting, securing file permissions (especially config files and SSL keys), running worker processes as a non-privileged user, considering a WAF, preventing information leakage, and following the other best practices outlined in the "Securing Your NGINX Deployment" section of this guide. Security is a continuous effort, not a one-time setup.
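Several of the practices listed above map directly onto a few directives. The following is a minimal, non-exhaustive sketch; the account name, domain, certificate paths, and rate-limit values are assumptions to adapt, and for production cipher settings the Mozilla SSL Configuration Generator listed under Further Resources is a better starting point.

```nginx
user www-data;    # assumed unprivileged account (often "nginx" on RHEL-family systems)

events {}

http {
    server_tokens off;    # avoid leaking the NGINX version

    # Rate limiting: track clients by IP in a 10 MB shared zone at ~10 requests/second
    limit_req_zone $binary_remote_addr zone=per_ip:10m rate=10r/s;

    # Redirect all plain-HTTP traffic to HTTPS
    server {
        listen 80;
        server_name example.com;                          # assumed domain
        return 301 https://$host$request_uri;
    }

    server {
        listen 443 ssl;
        server_name example.com;

        ssl_certificate     /etc/nginx/certs/example.crt; # assumed paths; key readable only by root
        ssl_certificate_key /etc/nginx/certs/example.key;
        ssl_protocols       TLSv1.2 TLSv1.3;              # disable legacy SSL/TLS versions

        # Ask browsers to use HTTPS only for the next two years
        add_header Strict-Transport-Security "max-age=63072000" always;

        root /var/www/example;                            # assumed document root

        location / {
            # Allow bursts of up to 20 excess requests; further excess is rejected (503 by default)
            limit_req zone=per_ip burst=20 nodelay;
        }
    }
}
```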

Q: What is NGINX primarily used for on Linux systems?

A: Linux is the most common and natural operating system environment for deploying NGINX due to its performance characteristics and deep integration with Linux networking capabilities (like epoll). On Linux, NGINX is used for its full range of functions:

- Hosting websites (serving static files, reverse proxying to dynamic backends like PHP-FPM, Python/uWSGI, Node.js).

- Acting as a reverse proxy to enhance security, performance (caching, SSL offload), and manageability of web applications.

- Load balancing HTTP/S traffic across multiple backend application servers.

- Caching frequently accessed content to accelerate delivery and reduce backend load.

- Serving as an API gateway to manage, secure, and route API traffic.

- Occasionally, as a mail proxy (SMTP/POP3/IMAP) or a generic TCP/UDP proxy/load balancer for non-HTTP services (databases, message queues).
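For the non-HTTP case, NGINX uses a separate stream context. The sketch below assumes NGINX was built with the stream module (or loads it as a dynamic module, for example via load_module modules/ngx_stream_module.so; on some packages); the backend addresses and the idea of balancing PostgreSQL read replicas are hypothetical.

```nginx
# stream {} goes at the top level of nginx.conf, alongside http {}, not inside it
stream {
    # TCP: balance connections across two hypothetical PostgreSQL read replicas
    upstream pg_replicas {
        least_conn;                   # new connections go to the replica with the fewest active ones
        server 10.0.0.21:5432;
        server 10.0.0.22:5432;
    }

    server {
        listen 5432;
        proxy_pass pg_replicas;
        proxy_connect_timeout 5s;
    }

    # UDP: forward DNS queries to a hypothetical internal resolver
    upstream dns_resolvers {
        server 10.0.0.53:53;
    }

    server {
        listen 53 udp;
        proxy_pass dns_resolvers;
    }
}
```

Because the stream module works at the connection level, it cannot inspect HTTP paths or headers; routing decisions are based on addresses and ports (or, with additional modules, on data such as TLS SNI).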

It typically runs as a standard system service managed via systemd (systemctl) or older init systems.

Further NGINX Resources and Learning

- Official NGINX Documentation: https://nginx.org/en/docs/ - The definitive reference guide for all directives, modules, and core concepts.

- NGINX Blog (by F5): https://www.nginx.com/blog/ - Features tutorials, technical deep dives, use case examples, product news, and best practice articles.

- NGINX Community Support Page: https://www.nginx.org/en/support.html - Provides links to community mailing lists, forums, and other support channels.

- NGINX Unit Documentation: https://unit.nginx.org/ - The official documentation specifically for the NGINX Unit application server.

- F5 NGINX Plus Product Page: https://www.nginx.com/products/nginx/ - Detailed information on the features and benefits of the commercial NGINX Plus offering.

- DigitalOcean Community Tutorials (NGINX Tag): https://www.digitalocean.com/community/tags/nginx - Offers a large collection of high-quality, practical tutorials and guides covering various NGINX setup and configuration tasks.

- Let's Encrypt: https://letsencrypt.org/ - The non-profit Certificate Authority providing free SSL/TLS certificates, essential for enabling HTTPS.

- Mozilla SSL Configuration Generator: https://ssl-config.mozilla.org/ - An invaluable tool for generating secure, up-to-date SSL/TLS configurations for NGINX (and other servers) based on desired compatibility levels.